More and more people are nowadays thinking of cloud migration. The question of “Which cloud provider performs better for my MySQL workload?” is really common but cannot always be easily answered. However, there are ways to come up with an answer. This question also applies when thinking of moving to any provider, not necessarily a cloud one or DBaaS.

More and more people are nowadays thinking of cloud migration. The question of “Which cloud provider performs better for my MySQL workload?” is really common but cannot always be easily answered. However, there are ways to come up with an answer. This question also applies when thinking of moving to any provider, not necessarily a cloud one or DBaaS.

The Problem

The most reliable conclusion can be found if you have a testing environment that is fully identical and can produce the same amount of traffic compared to your production version. In this case, the comparison should be straightforward as what you have to do is point your testing environment against the under-evaluation (cloud) providers, and evaluate the differences in performance. But this is not always easy, as many organizations do not have such environments or it’s not always possible to replay all traffic.

The Idea

In this post, if you don’t already have a testing environment that can produce production-like traffic, I am going to show you one methodology that will help you decide which provider offers better performance for your workload.

First of all, what we need to take into consideration is that finding the most suitable provider to migrate to depends on a few different factors such as:

- Pricing

- Business requirements

- Features and tools provided

- Limitations that may apply

- Technologies supported

- High Availability

- And so on…

There are already many blog posts describing that, and some time ago I wrote one with a high-level overview of RDS and Aurora called “When Should I Use Amazon Aurora and When Should I use RDS MySQL?“.

Assuming that you have already evaluated everything, and came across multiple available options, you may now have to make your final decision; but this is not easy as you don’t really know which of these providers performs better for your workload.

I’m pretty sure that you already know some benchmarking tools such as sysbench, mysqlslap, tpcc-mysql, etc.

Searching around you may find out that there are multiple whitepapers and benchmarks available. And as you most probably already know, one of the major factors when performing a benchmark is the workload, and the workload for every environment is different.

For example, you may come to two totally different benchmark results if you replay different workloads. One benchmark may say that provider X is better but another benchmark may say that provider Y is better. One benchmark may say that provider X provides 20% better performance but another may say that both providers provide the same performance. And this is not strange, because assuming that hardware between the providers is similar, any actual benchmark relies on the workload.

The scenario I am going to present is really simple and based on a single host accepting full traffic, read and write. We will compare how queries collected on the current production master are behaving when replayed against different providers. The logic is similar for every topology, so you could easily do any adjustments to match your environment.

Please be aware that enabling slow logs may introduce some overhead due to the increased IO needs for writing the logs, so enable them with caution. It may also introduce increased needs for disk capacity (depending on the number of logs captured). The log file should ideally reside on a separate partition or on a partition that is not used by the main function of the server.

Prerequisites

Let’s say that we have to choose between two providers, provider A and provider B. For doing the performance evaluation, we will need the following:

- Three hosts with identical hardware specs. One located at your current provider, another hosted at provider A, and the last one hosted at provider B. Hardware specs do not necessarily need to match your current masters but should be the ones you are attempting to move to.

- MySQL/Percona Server for MySQL/MariaDB (depends on what you currently have) – same version and configuration to your production host – installed on all three hosts above

- The same exact dataset for all three hosts above

- A sample of queries ran against your master

- A tool that will replay these queries against the three hosts above

Tasks one, two, and three should be straightforward to do on your own so I will only focus on the remaining ones.

To collect the queries performed against your master, you need to enable slog logs and set an appropriate sampling (if applicable). The suggested settings are:

- slow_query_log = ON

- long_query_time = 0

- log_output = FILE (or empty which defaults to FILE)

- log_slow_rate_limit = 100. To allow us to collect more queries this should be further lowered after initial configuration. This configuration variable applies to Percona Server for MySQL and MariaDB only.

If you are using Percona Monitoring and Management Query Analytics, the settings above may already be enabled so you may only have to temporarily adjust them. In any case, you should cross-check if this is the case and adjust as needed because your settings may not match the ones suggested.

With the settings above you are going to log every single query (long_query_time=0) with a sampling of 1/100 (log_slow_rate_limit = 100). Sampling can be further adjusted i.e. 1 so we can capture all queries.

There is no magic number for how many slow logs to collect or what the better timeframe is to collect slow logs. It depends on your workload and we usually prefer a peak time, i.e. the busiest window during a normal business day, a 24-hour window, or times when reoccurring normal jobs like end of month jobs, daily batch processing, billing, etc., so we can collect as many logs as possible.

The Benchmark

Having the slow logs, we need to replay them and collect the metrics. We will replay them using the pt-upgrade tool which is part of percona-toolkit. What you need to have in mind is that pt-upgrade does not offer a concurrency option, so all queries will be sequentially replayed.

Please be sure that, while performing the pt-upgrade operations, none of the three hosts of the above setup is taking any traffic other than the one we are going to generate, as otherwise, the results will not be reliable.

Steps to replay traffic:

We first need to run pt-query-digest on the slow logs we collected (let’s say slow.log). The file that we are going to run pt-query-digest against is the one specified by slow_query_log_file configuration variable.

1 | time pt-query-digest --sample 50 --no-report --output slowlog slow.log > slow_log_50_samples.log |

This way, we will export 50 samples per query, which is enough. The slow_log_50_samples.log will now be used for the benchmark.

On every host, we need to run pt-upgrade twice. The first execution will ensure that our InnoDB buffer pool has been warmed up while the second one will be the actual execution.

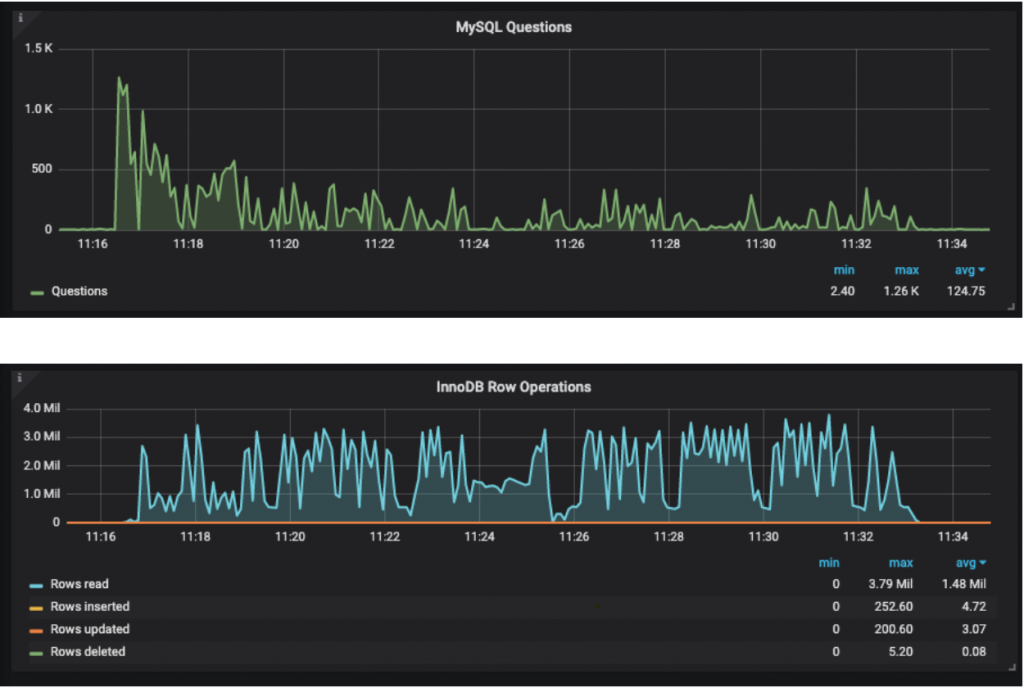

For the purposes of this blog, I’m going to share some results from a benchmarking that I recently did.

On the testing instance on our current provider:

1 | time pt-upgrade h=localhost --max-examples=1 --save-results upc_RO_1 slow_log_50_samples.log |

And again specifying a new directory,

1 | time pt-upgrade h=localhost --max-examples=1 --save-results upc_RO_2 slow_log_50_samples.log |

where slow_log_50_samples.log is the processed slow logs that we collected.

With the example above we’ve replayed all the queries and we saved the results to a folder named “upc_RO_2” (we are mostly interested in the second execution which is after warming up the InnoDB buffer pool).

We now need to do exactly the same for the two hosts that we are evaluating. Run one time to warm the InnoDB buffer pool and another for the actual benchmark. This time we won’t use the slow logs as a source, but rather the results from the first execution. For further details on how pt-upgrade works and all the available options, please check the official pt-upgrade page.

On provider A:

1 | time pt-upgrade upc_RO_2/ h=localhost --max-examples=1 1> GCP_RO_1_results.out 2> GCP_RO_1_results.err |

And again:

1 | time pt-upgrade upc_RO_2/ h=localhost --max-examples=1 1> GCP_RO_2_results.out 2> GCP_RO_2_results.err |

On provider B:

1 | time pt-upgrade upc_RO_2/ h=localhost --max-examples=1 1> EC2_RO_1_results.out 2> EC2_RO_1_results.err |

And again:

1 | time pt-upgrade upc_RO_2/ h=localhost --max-examples=1 1> EC2_RO_2_results.out 2> EC2_RO_2_results.err |

The files we should focus on are:

- GCP_RO_2_results.out which is the comparison between provider A and the current provider

- EC2_RO_2_results.out which is the comparison between provider B and the current provider

The output in each file should end with something like that. Let’s see how it looks for provider B (EC2_RO_2_results)

1 2 3 4 5 6 7 8 9 10 11 | #----------------------------------------------------------------------- # Stats #----------------------------------------------------------------------- failed_queries 60 not_select 0 queries_filtered 0 queries_no_diffs 36149 queries_read 36830 queries_with_diffs 621 queries_with_errors 0 |

Which shows that:

- 36830 queries were read in total

- 60 queries failed to be executed

- 36149 queries had no diffs

- 621 queries had diffs

621 queries out of 36830 queries is almost 1.6%. These queries (1.6%) include both queries that have returned a different set of rows or performing worse so actual degradation might be even less than 1.6%.

So what we know is that at least 98.4% of the queries are performing exactly the same or better than our current provider.

Please note that only queries returning a different set of data or degradation will be reported, as this is what we are actually interested in, so you won’t see something like “queries performing better” in the output above.

Queries that failed and queries with diffs should be further evaluated. These may help us uncover any incompatibilities or problems due to non-supported queries.

To evaluate these queries you should get familiar with how pt-upgrade works. Trying to give some hints only as this is not the goal of this post, the “queries_with_diffs” queries may report differences for various reason such as:

Queries are expected to report different output due to the difference in the hostname.

- monitoring queries

- performance_schema

- information_schema

- use of DATETIME functions such as NOW(), CURDATE().

- different order (missing order by) or order by column has similar values including NULL for multiple rows

Queries_with_diffs also includes the queries which are worse-performing.

Doing a grep for “increase” will help you reveal the queries performing worse, i.e.:

1 2 3 4 5 6 7 | $ fgrep -i increase EC2_RO_2_results.out 0.002259 vs. 0.029961 seconds (13.3x increase) 0.007984 vs. 0.084002 seconds (10.5x increase) 0.005101 vs. 0.051768 seconds (10.1x increase) 0.000886 vs. 0.009347 seconds (10.5x increase) 0.010519 vs. 0.118369 seconds (11.3x increase) |

So, in this case, we have only five examples of queries performing worse. These queries can be found in the EC2_RO_2_results.out file which can be further reviewed.

What you now need to do is repeat the evaluation above for the results for provider A (GCP_RO_2_results.out). After reviewing this as well, it should be straightforward for you to identify which provider offers better performance.

For the scenario above, we compared how our current provider is performing compared to provider A and provider B. Following the same logic, you can make as many combinations as you want. For example, you can compare the current provider to another single provider only, compare provider A to provider B, add another provider C, etc.

Assuming that you performed the READ ONLY testing and you are interested in WRITE performance as well, you can now do a WRITE testing. The only adjustments you’d need to do is:

- Add –no-read-only to the pt-upgrade options

- Perform only once against each node as there is no need to warm the InnoDB buffer pool

- Select a different folder to save the results and a different filename for the statistics

For your evaluation, you should follow the same strategy as you did for the READ ONLY testing.

*** Please note that WRITE testing is destructive to the dataset operation so you must be sure that you are not performing this against your master or any other valuable dataset. While doing a WRITE testing, all queries that you have captured on your master will be replayed and the subsequent write fails may fail. For example, you may have captured an “insert” query but the record may already exist in the snapshot of data you’ve used for the performance testing. So when trying to run this insert again, it may violate a PK.

Conclusion

When planning a migration you should always evaluate performance using your own workload as every workload is unique. pt-upgrade can help you identify incompatibilities, queries that may fail when replayed against different hosts, or performance degradation. It’s a great tool that can help you evaluate different providers and compare performance using your own workload.