In this post, we’ll look at the results from some MySQL 5.7 read-write benchmarks.

In my past blogs I’ve posted benchmarks on MySQL 5.5 / 5.6 / 5.7 in OLTP read-only workloads. For example:

- MySQL 5.7 sysbench OLTP read-only results: is MySQL 5.7 really faster?

- MySQL 5.7 primary key lookup results: is it really faster?

- ProxySQL versus MaxScale for OLTP RO workloads

Now, it is time to test some read-write transactional workloads. I will again use sysbench, and my scripts and configs are available here: https://github.com/Percona-Lab-results/201605-OLTP-RW.

A short description of the setup:

- The client (sysbench) and server are on different servers, connected via 10Gb network

- CPU: 56 logical CPU threads servers Intel(R) Xeon(R) CPU E5-2683 v3 @ 2.00GHz

- sysbench ten tables x 10mln rows, Pareto distribution

- OS: Ubuntu 15.10 (Wily Werewolf)

- Kernel 4.2.0-30-generic

- The storage device is Samsung SM863 SATA SSD, single device, with ext4 filesystem

- MySQL versions: 5.7.12 , 5.6.30 and 5.5.48

InnoDB holds all data in memory, and InnoDB log files are big enough, so there are only IO writes (which happen in the background) and there is no pressure from InnoDB on the IO subsystem.

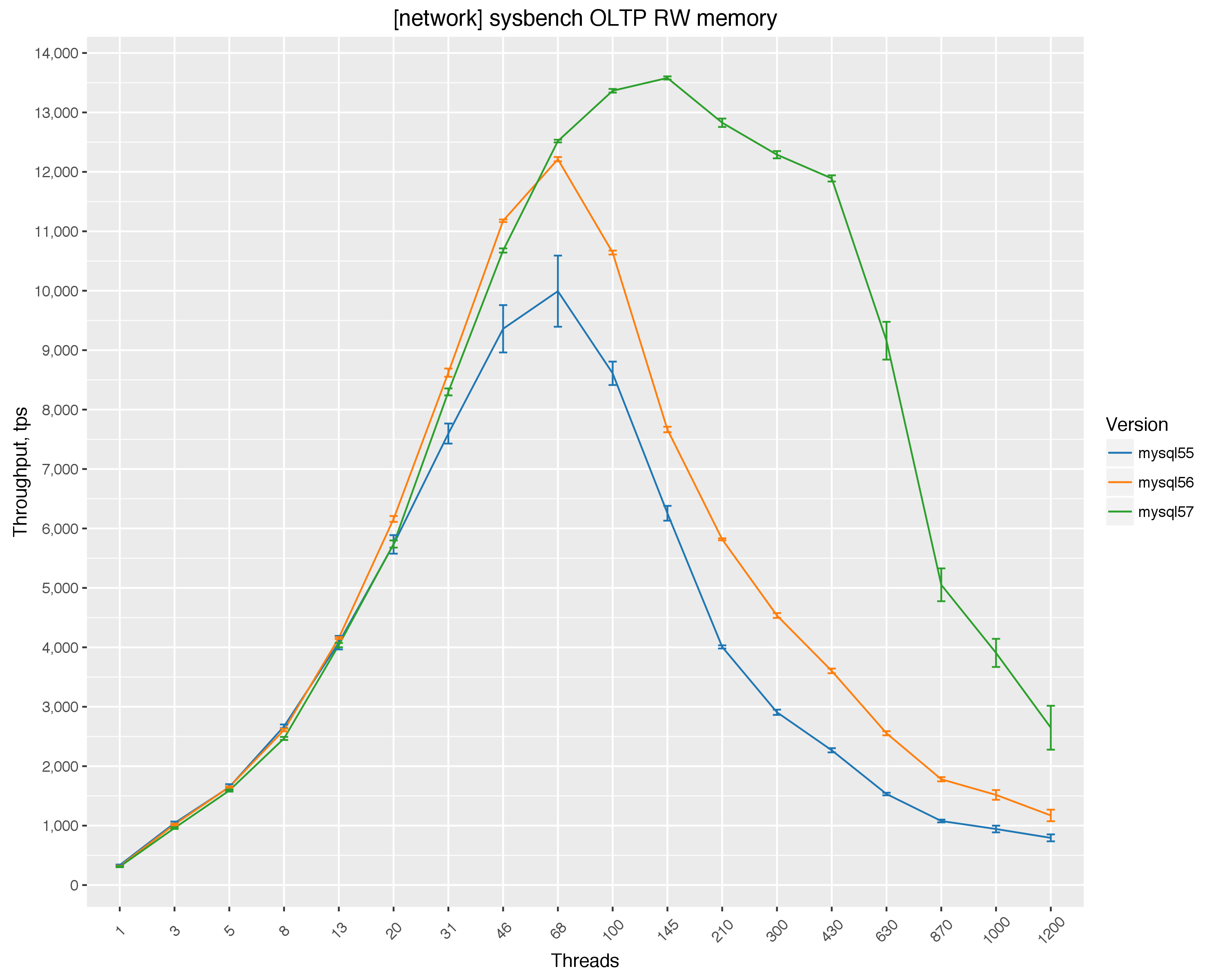

The results looked like the following:

The vertical line shows the variability of the throughput (standard deviation).

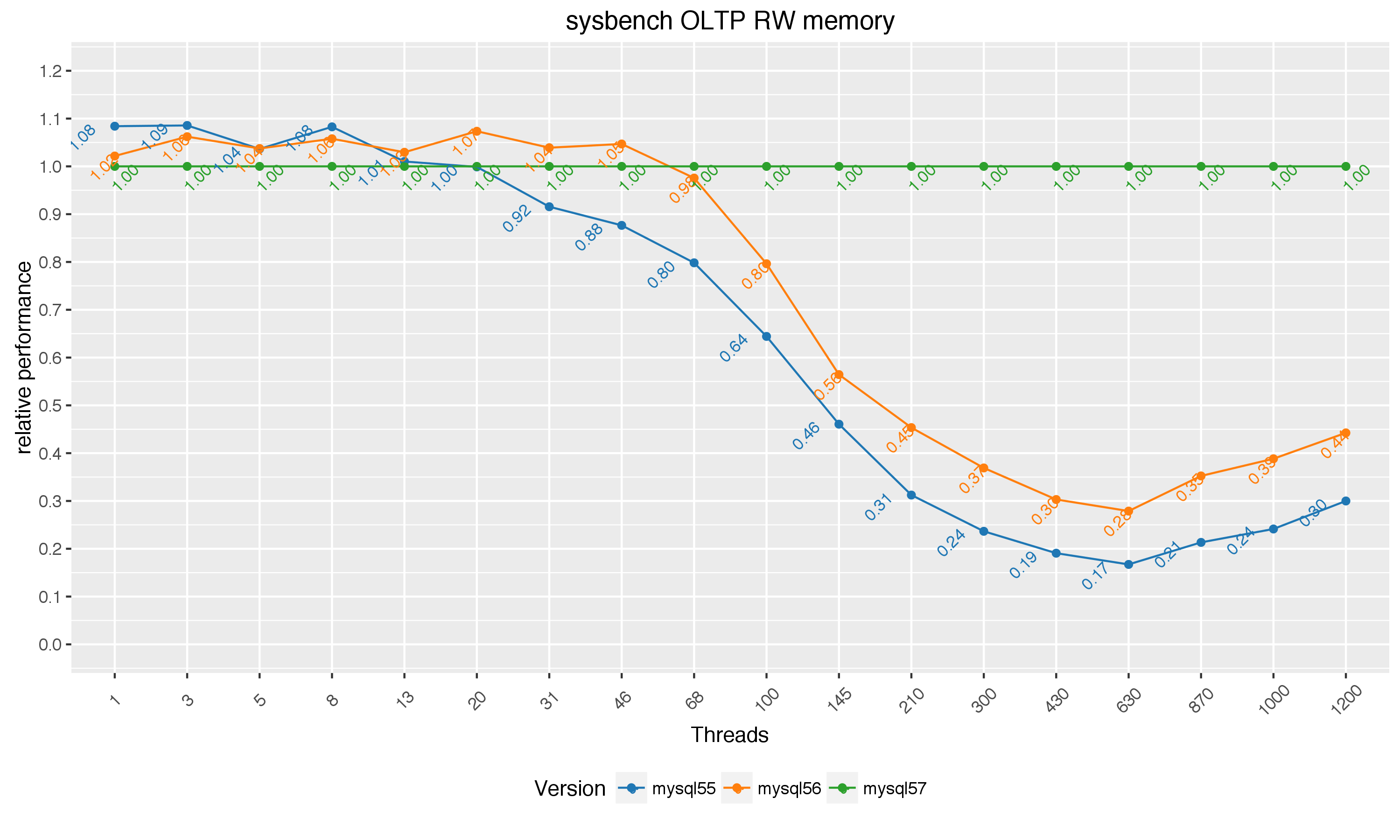

To show the difference for a lower numbers of threads, here is chart with relative performance normalized by MySQL 5.7 (MySQL 5.7 = 1 in the following chart):

So we can finally see significant improvements in MySQL 5.7, it scales much better in read-write workloads than previous versions.

In the lower numbers of threads, however, MySQL 5.7 throughput is still behind MySQL 5.5 and MySQL 5.6, this is where slower single thread performance in MySQL 5.7 and longer execution paths show themselves. The problem with low threads read-write performance is replication. I wonder how a slave 5.7 performs comparing to 5.6 slave – I am going to run this benchmark soon.

Another point to keep in mind is that we still see a “bell shape,” even for MySQL 5.7. After 430 threads, the throughput drops off a cliff. Despite Oracle’s claims that there is no need for a thread pool anymore, this is not the case – I am not able to prevent a throughput drop using magic tuning with innodb_thread_concurrency and innodb_spin_wait_delay. No matter what, MySQL 5.7 is not able to maintain throughput on a high amount of threads (1000+) for this workload.

What can be done in this case? I have two solutions: Percona Server with thread pool functionality, or ProxySQL with connection multiplexing. I will show these results in the next post.

Vadim,

Generally the drop of performance with high amount of threads can come from internal contention (which can be fixed by internal optimizations) as well as by row locks, which mainly depends on what transactions you have in flight. Have you looked into how much row lock waits is happening for this workload ? With Pareto distribution I would expect fair amount.

Managing transaction flow efficiently in such case is an interesting problem on its own as suspending thread while it hold row level rocks is a bad idea. I wonder if

– Does innodb_thread_concurrency prioritize threads which have row level locks ?

– What do Percona Thread Pool (or any other) does in this case ?

– How does ProxySQL handle it ?

Peter,

I posted results with ProxySQL and thread pool here

https://www.percona.com/blog/2016/05/19/fixing-mysql-scalability-problems-proxysql-thread-pool/

Would partitioning the tables and setting transaction isolation to serializable help, as long as there were no cross partition accesses?

David,

As this is pareto distribution (80% of requests hit 20% of the same data), I do not think partitioning will help, as there is contention for the “hot” data.

why use SM863? as I konw, SM863 is good at seq read & write, and PM863 is good at random read & write.

jk,

from this http://www.anandtech.com/show/9455/samsung-releases-pm863-sm863-enterprise-sata-ssds-up-to-384tb-with-3d-vnand I read that SM863 is much better with random writes, no?

The same article says “Due to the more durable MLC V-NAND, the SM863 is mostly aimed for write-intensive applications that includes use cases such as online transaction processing (OLTP) and financial services, whereas the PM863 is targeted at read and mixed IO workloads, such as media streaming.” which I take that SM863 is more suitable for database workloads.